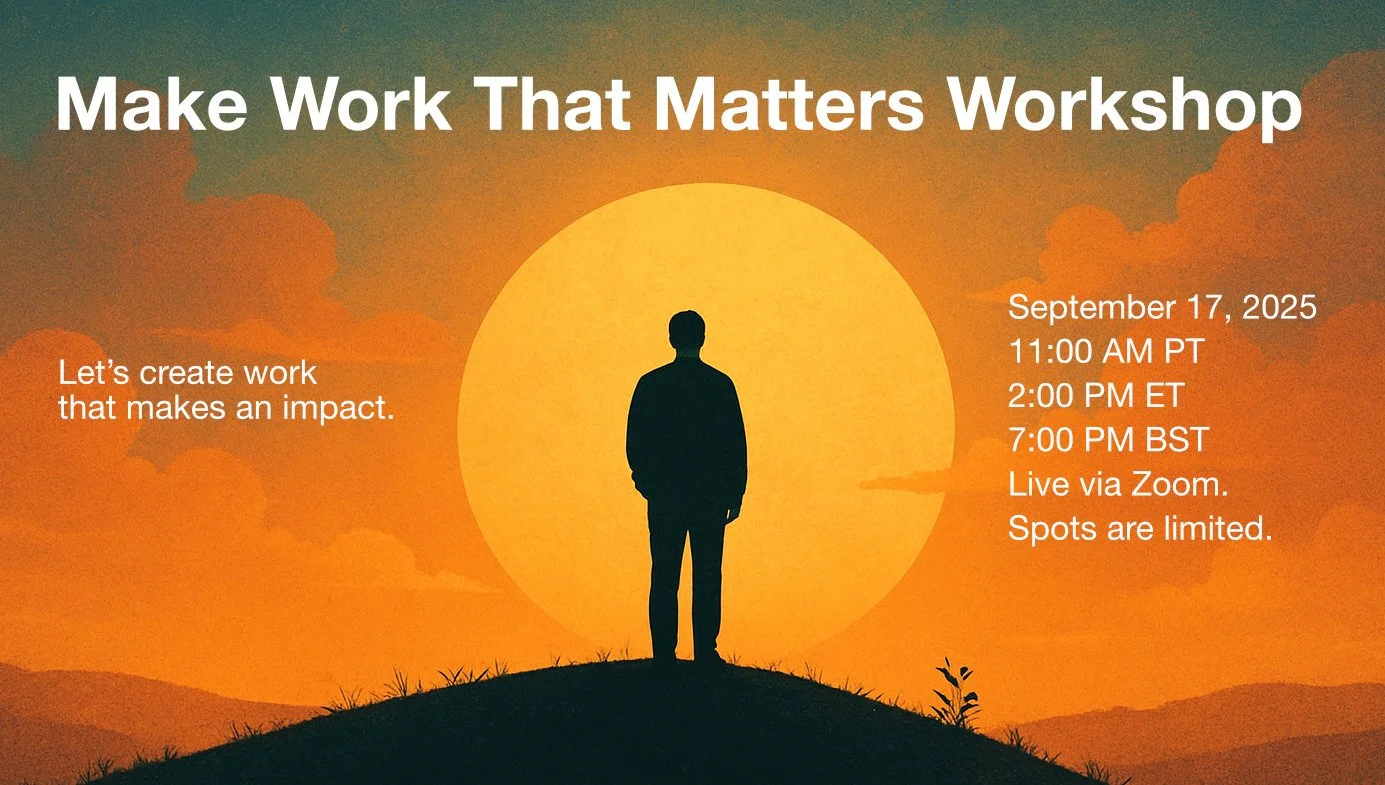

Free Meaning Webinar Tomorrow

Join me tomorrow for the Make Work That Matters workshop!

In this one-hour Zoom session, we'll explore how to create work that makes an impact. I'll share key ideas, we'll do some exercises to put them into practice, and we'll learn from each other through discussion. By the end of the session, you'll leave with fresh energy and a clearer sense of how to make work that matters.

This session is free!

Finding Meaning in The Pitt

The Pitt is a trashy-but-great medical drama that, surprisingly, has a lot to teach us about finding meaning in our work and lives.

If you want to take the next step, register for my FREE Make Work That Matters workshop. Together, we’ll explore how to find and create meaning in your work.

September 17, 2025

11:00 AM PT / 2:00 PM ET / 7:00 PM BST

Live via Zoom — spots are limited.

Transcript

The Pitt is a medical drama that's compressed into a single ER shift, which we experience hour by hour. It's an endless night of blood, grief, and impossible choices.

The staff are subjected to an endless onslaught of suffering and death. They never have enough time or resources to do the job as well as they’d like, and they’re constantly downstream from problems they’re powerless to solve.

And yet, mostly they endure.

How? Why keep going when everything says stop?

They keep going because this work matters, because it has meaning.

Meaning might seem simple, but it is not at all.

And this soapy, slightly trashy but also great TV show actually can teach us a lot about finding meaning and creating meaning.

In my last video, Infinite Remix, I asked: “What should we make, when we can make anything?” The answer is we make work that matters.

And making work that matters means you need to find meaning and create meaning.

So... what is meaning?

The writer Emily Esfahani Smith identifies four pillars of meaning: belonging, purpose, storytelling, and transcendence.

Purpose

Let's start with purpose, because in The Pitt the purpose is clear.

This is emergency medicine: save who can be saved, help who can be helped, protect the team, hold the line.

This kind of stark purpose goes a long way toward creating meaning.

The character Cassie McKay probably didn't have meaning in her life for a long time. She came to medicine later in life after being an addict. She's lost custody of her son. She wears an ankle monitor because of an ugly fight with her ex's girlfriend.

Emergency medicine for her is an answer. She’s chosen medicine to stitch her own life back together while she stitches others' bodies back together.

That's purpose.

Purpose is a box that everybody on The Pitt has ticked, no problem.

And for the rest of us, purpose doesn't need to be as dramatic as saving lives. Purpose can be about contributing to communities, caring for others, bettering ourselves, and following our principles.

But purpose alone won't carry you. We also need belonging.

Belonging

Belonging means being valued for who you truly are. Smith contrasts this with “cheap belonging,” where your value depends on conforming or maintaining certain beliefs. True belonging is about being valued for the real you.

Belonging is about feeling seen, recognized, and cared for by others, and returning that care in kind.

The character Trinity Santos rubs everybody the wrong way at first. She's loud, abrasive, all edge. She’s there to prove herself, not to belong.

But as her flaws and strengths become apparent, the team begins to see her resilience and honesty, and she’s slowly woven into the group. She softens a little, but more importantly, she’s valued for who she really is.

Belonging is incredibly basic, human stuff, but it is undervalued in this hyper-individualistic age.

But belonging, too, can be fragile. It can falter, and when it does we need to lean on another pillar: story.

Storytelling

Storytelling is our narrative that makes sense of events and our identity.

The Pitt is, of course, a story, but the characters are also telling stories to each other, and to themselves too.

Nurse Dana Evans was born at the hospital she now works at and volunteered there as a teenager. It is her place in this world. But after getting sucker-punched, that story shatters, and she doubts she can go on.

She returns, because her story is that she’s the one who keeps the floor alive. And that story buys her some time so that she can find a new story that can keep her going beyond today. We'll see where this leads in Season 2.

But even all three of these pillars might not be enough. Sometimes they all collapse and the only thing left is the final pillar of meaning: transcendence.

Transcendence

Transcendence is found in moments that lift us beyond the self into something vast and dignifying. Transcendence gets you through when your defeat is total and there are no other answers.

In The Pitt, transcendence arrives for the lead character Michael "Robby" Robinavitch after the death of his stepson's girlfriend at a mass shooting. He sits in a children's unit that's been turned into a morgue and recites the Shema (shuh-ma), one of the central prayers of Judaism. For a moment, his grief is held inside something older, deeper, and more vast than this hospital.

Transcendence is the hardest part of meaning for many of us to find. It's mostly been the dominion of religion, and a lot of us are no longer religious.

But you can find transcendence elsewhere. You can find it in beauty, in awe of nature, in the flow of creation, in gratitude, and in rituals, even simple ones like shared meals or book clubs.

Transcendence is probably something you get from outside your work. Leave space in your life so you can find it. Because when things are at their worst, transcendence is what can get you through.

The four pillars of meaning each have a role in making our work and lives matter.

Purpose gives you direction. Belonging gives you connection. Storytelling makes it make sense. And transcendence lifts us beyond ourselves.

The Pitt shows how the components of meaning don’t arrive in order, and they don’t stay put. You need to find them over and over again.

So to make work that matters, answer these questions for yourself.

What purpose will you serve?

Who will you belong to?

What story will you tell?

What will you reach for beyond yourself?

The Pitt is not only about saving patients. It’s about saving meaning, hour by hour, person by person, so the work can continue, and so that your life can be worth its cost.

Why Most Work Feels Empty and How to Fix It

What do we make when we can make anything? Meaning.

In my last video, Infinite Remix, I asked: “What should we make, when we can make anything?”

The answer is: we make work that matters.

Making work that matters means that your work has meaning.

Sounds straightforward. Except, like… what is meaning?

The writer Emily Esfahani Smith identifies four pillars of meaning:

Purpose

Belonging

Storytelling

Transcendence

Purpose

Purpose is knowing your work matters beyond yourself and beyond the moment.

Sometimes the purpose of your work is obvious: teachers guide students, firefighters protect lives, farmers grow the food we eat.

But for many of us, purpose might be less of a slam dunk. Not all work gives us a clear sense of impact. But you don’t have to rock every one of these categories. You can complement your sense of purpose with areas outside of your occupation.

Purpose can be about contributing to communities, caring for others, bettering ourselves, and following our principles. Or it might simply be about serving your family.

Purpose directs us forward. But direction alone isn’t enough.

Belonging

Belonging is being valued for who you truly are. Smith contrasts this with “cheap belonging,” where your value depends on conforming or maintaining certain beliefs. True belonging is about being valued for the real you.

Belonging is about feeling seen, recognized, and cared for by others, and returning that care in kind.

Belonging is incredibly basic, human stuff, but it is undervalued in our hyper-individualistic age.

And sometimes, belonging can falter. When that happens, we lean on the next pillar.

Storytelling

Storytelling is how we make sense of our lives. It’s the narrative we tell ourselves that explains who we are, where we’ve been, and where we’re going. Without a story, our life is a series of disconnected events. With a story, those events form an arc that helps us understand ourselves and persevere through hardship.

With story, life feels coherent, valuable, and survivable.

But even story has its limits. Some moments defy explanation. That’s when we need transcendence.

Transcendence

Transcendence is found in moments that lift us beyond the self into something vast and dignifying.

Transcendence is the hardest part of meaning for many of us to find now. In a world that often flattens everything into productivity and entertainment, transcendence reminds us we are more than consumers and workers.

Religion provides transcendence for many, but you can find it elsewhere. You can find transcendence in beauty, in awe of nature, in the flow of creation, in gratitude, and in rituals, even simple ones like shared meals or book clubs.

Transcendence will likely be something you find outside your work. Leave space in your life so you can find it. Because when things are at their worst, transcendence is what can get you through.

Meaning is for humans only

AI can’t do any of this. It can remix, generate, and simulate, but it can’t create meaning. That’s our language alone.

As AI makes it possible to create almost anything, the question shifts from what to make to why. My hope is that AI clears away drudgery so we can focus on the parts of work that matter. I’m trying to play a small role in making that happen.

The questions you must answer

The four pillars hold us up in different ways:

Purpose gives you direction.

Belonging gives you connection.

Storytelling makes it make sense.

And transcendence lifts us beyond ourselves.

So if you want to make work that matters, keep asking:

What purpose will I serve?

Who will I belong to?

What story will I tell?

What will I reach for beyond myself?

Meaning isn’t something you solve once. It’s something you build and rebuild. You’ll revisit these pillars again and again as life shifts beneath you.

Making work that matters doesn’t just keep you moving forward. It makes the journey worthwhile.

Infinite Remix: The Ghibli Moment

Infinite Remix: What happens when AI starts to create?

Watch it now on YouTube. Like, share and subscribe.

Infinite Remix: The Ghibli Moment Transcript

We uploaded everything. Every song, every selfie, every secret.

And now AI is remixing all of it.

And we are astonished... and confused... and angry.

But this is only the latest chapter in a very old story.

We’ve always remixed. Nothing begins from scratch, everything begins from something else. Every melody, every shot, every move, gets passed on, sampled, transformed, made new.

But now there's a plot twist. And this plot twist is upending our ideas of creativity, ownership, and meaning — it hits hard.

Every second of every day, machines are remixing everything we've ever uploaded.

So we can now tap into our collective imagination and orchestrate it. This is called generative AI, gen AI: ChatGPT, Veo, Sora, Midjourney, Claude, and a flood of others.

Gen AI is simultaneously amazing. And so awful.

It's useful and exciting. It's also invasive and empty.

This is the AI experience. It's a warp speed journey through wonder and horror.

For better or for worse, AI is the new creative frontier. And it is the terrain we will navigate together.

I'll show you the big picture of what's happening.

What this means for your creative future.

When to embrace these tools.

When to resist them.

And most importantly: what should we make, when we can make anything?

Welcome to Infinite Remix.

My name is Kirby, and like you, I'm trying to figure out what it means to be creative when machines can create.

Let's start with the big picture. This all started 30 years ago.

The Great Upload

In the nineties, we began uploading ourselves, one kilobyte at a time.

We're emailing. We're chatting. We're blogging. There were a lot of cats involved.

We want to find our people. See what they’re doing. Show what we can do.

We want to matter, even if it's just for a second.

Then this happened.

We get the internet on our phones. We don't just go online anymore. We live there.

We upload every joke, every opinion, every stray thought. We confess, console, insult, perform, and sell and sell and sell.

Anything that's not bolted down becomes data.

In just a few decades, we sent hundreds of billions of terabytes upward into the cloud.

This was The Great Upload.

It's miraculous. It's monstrous. It's transcendent. It's radioactive.

We love it. We fear it. It changed us.

And for a while—not very long, really—it seemed like that was the whole story. We had this endless horizon of human creativity to explore and add to.

But then... the upload started to... I guess, dream?

This happened because of something called deep learning. Deep learning watches everything we’ve made, learns the patterns, and predicts what should come next.

It doesn’t feel, it doesn’t care. It predicts. But it’s so good at this that it can sort of create.

Deep learning transformed The Great Upload into generative AI. Not just an archive, but a remix engine.

When it works, gen AI feels like magic. It can pull ideas from thin air, remix your thoughts in ways you never imagined, and give you raw material that sparks something new.

Gen AI is creatively exciting. People are doing things we've never seen before. The results are hilarious, surreal, disturbing, sometimes beautiful.

And gen AI is just fun.

You can make your kid a Pixar character.

You can create a realistic video of whatever goofy thing you can think up.

And the cat memes have risen to new heights.

But of all the AI trends that went viral, only one felt like it truly meant something, only one touched something sacred. This was The Ghibli Moment.

The Ghibli Moment

I strongly feel that this is an insult to life itself.

Hayao Miyazaki

"An insult to life itself." This is what Hayao Miyazaki said in 2016 after watching an AI-generated animation.

But the truth is, Miyazaki was talking about something a lot more important than AI art.

Studio Ghibli and its visionary cofounder Hayao Miyazaki create highly stylized, densely human films that are widely beloved.

Their aesthetic requires painstaking manual labor. This four-second shot took 15 months to animate.

AI art, on the other hand, is fast, easy, and very good at mimicking styles.

In March 2025, the iconic Ghibli style collided with the gen AI behemoth.

ChatGPT got an update that could convincingly recreate the Ghibli look. And the internet ran with it, cranking out Ghibli-ized family photos and memes.

The backlash from artists and Ghibli fans was intense.

AI won’t make your photos Ghibli. Ghibli is hand drawn and each character has insane emotional depth. As scary it is to admit the result looks decent, it's nothing like Ghibli and will never be. Big fuck you to AI. This is a terrible advancement of technology.

Some of this anger was misdirected. Most people weren’t trying to replace Ghibli, or even make art. They were playing. They wanted to see themselves in this beautiful world, they wanted to be a part of something magical.

But the critique still stands. Something vital is missing from these images... and all AI art.

These outputs are undeniably impressive — the colors, the textures, it all looks right. ChatGPT was trained on greatness, and it shows.

But look closer and the cracks appear. A hand with four fingers. Things that look right at first but make no sense on closer inspection. Expressions that look simple and generic.

Now contrast this with that four-second shot from _The Wind Rises_.

Every frame was hand-tuned by Miyazaki himself.

Notice that everyone here has a story. A group struggling to move a cart. A panicked horse. A mother shielding her children.

In a Ghibli film, everything has purpose. Everything has meaning. Everything is cared for.

And you feel this when you experience their films. You can feel the life of another person. They were here.

You can also get this with a great song. A perfectly designed app. A meal made by someone who loves you. That sense that a person meant for this to matter.

These experiences have depth. You can sense the unmistakable presence of a human soul, shaped by their life, their taste, and their search for meaning.

AI images don't have depth. They resemble depth.

What AI gives you is the average of everything it’s seen.

And greatness stands out from the average, but even masterpieces have plenty of ordinary parts.

Master artists have worked this way for centuries.

The Renaissance painter Michelangelo painted the most important parts himself. But assistants filled in less important sections, like backgrounds and drapery.

Right now, that’s where AI is most impactful: helping with the ordinary.

With AI you can run your own workshop, your own studio, staffed with infinite, tireless assistants.

You may not make a Miyazaki masterpiece, but you can make something greater than what you could have done on your own, and perhaps even deeper and more meaningful.

And that famous Miyazaki quote? It's not even about AI art.

He wasn’t reacting to the technology — he was reacting to the content: this grotesque, flailing humanoid crawling across the screen.

After he watches this clip, he then talks about a close friend with a disability and how much he suffered.

What offended Miyazaki wasn’t how it was made. It was the lack of empathy, the lack of care, the lack of soul.

That's what he’s really afraid of. That's what we're really afraid of. Not machines, not styles.

We’re afraid of a world without soul.

Close

We are the very first humans to confront machines that learn from us... and remix us.

It’s a collective existential moment. And whether we’re amazed or afraid, many of us are saying the same thing:

“Yeah guys, I think we're cooked.”

But to me, human creators seem very far from done.

I used gen AI more in this video than ever before. And it is light years from replacing me. Even this... is mostly me. It's my voice. It's based on video of me.

What AI did was change how I work. It's faster, weirder, more expansive. It extended what I could do.

That’s the future I see, a collaboration. A blend of human and machine, where AI handles the ordinary, and we bring the extraordinary: the depth, the meaning, the soul.

What we all need to do is something bold: let's see AI as it is—no panic, no hype.

Let's unpack what AI really can do. And use it to create work that matters.

Continue the Journey

Hi everybody—if this resonated with you and you want to keep going, I’ve got something for you.

I’m running a 5-week live cohort class called Infinite Remix Live, plus a one-time 3-hour workshop.

These are all about using gen AI tools like the brand new ChatGPT-5, Claude, Midjourney, Veo, and more to create faster and smarter while keeping your human voice at the center.

It's for creative professionals, marketers, educators, writers, filmmakers, entrepreneurs—and the creatively curious.

If you missed enrollment, no worries. You can sign up to get notified about future classes, explore our on-demand courses, or dig into some great free content.

Hope to see you there.

Infinite Remix Live — a 5-week deep dive with a small group.

Infinite Remix Spark — a single, fast-paced 3-hour session.

Hope for Media Creatures

Like many of you, I'm a media creature.

Growing up in a rural area, I found my teachers in albums, films, and books that somehow made their way to me. They were transmissions from other worlds, other ways of thinking. They fundamentally shaped who I became.

And I’m still that kid, always searching for something real, something that reveals one of life’s secrets.

Now I watch AI-generated content flood every platform, and I wonder: What happens when the next generation of seekers only finds content with no soul, no insight?

In this episode of Dream Logic, I explore this tension between the media that forms us and the slop that might deform us.

Kirby

Please share with the curious.

Created: Kirby Ferguson & Karin Fyhrie

Title Design: Yeun Kim

Music: “Coquina” by Green-House

Wrestling with Originality

If you didn't make it yourself, can you really call it your own?

In this latest episode of Dream Logic, Karin talks about how working with AI feels like a wrestling match, a constant push and pull, a struggle to coax something fresh and original out of a system that wants to create the common. We explore what this metaphorical grappling with generative AI feels like, how to build characters through hyper collage, and what we can really call our own when it comes to art.

Please share with the curious.

Imagination Isn't Algorithmic

Like most other creative people, I don’t feel fantastic about AI art.

I don’t know what it will do to the marketplace.

I don’t know what it will do to creative expression.

I’m concerned about the rising tide of slop.

And I’m truly worried about the vortex of highly believable bullshit it will unleash.

But I also know this: generative AI is here to stay.

Powerful technologies don't just disappear. All of us, in our small ways, must make generative AI the best it can be.

In this episode of Dream Logic, "Imagination Isn't Algorithmic," I explore what's worth appreciating in AI art, how it can be a mirror of us, and why art has always been artificial. The most essential part of creativity is unknown, even to the most perceptive mind. And that, I believe, is a good thing.

Please share with the curious.

New video series collab: Dream Logic

Dream Logic: Exploring the horizons of creative work

This is something different from me. A collaboration with my pal Karin Fyhrie, an attempt to do something different and hopefully beautiful in collaboration with AI. It’s the start of a conversation: musings, artist interviews, and long looks into the strange, evolving landscape of new creative tools.

In this episode, Karin reflects on the unexpected freedoms and failures of this medium, and what she's noticed among peers in the design world. What it makes possible. What it doesn’t. It’s an open invitation to think differently.

Season 1 episodes drop weekly. Subscribe to our Substack to stay up to date:

AI image generation has arrived

My official assessment of AI image generators has always been this: they’re impressive and fun, but not ready for real creative work. You could maybe get something usable occasionally, but overall, DALL-E, Midjourney, and the rest were toys, not tools.

OpenAI’s 4o image generation (formerly DALL-E) is the first AI image generation that is a genuine tool. Gotta say, I’m shocked. I didn’t expect to be here yet. Here’s what’s changed.

Prompt: a perfectly realistic portrait photo of an average looking 40 year old korean man

It creates realistic (enough) portraits

Portraits of people now look real. Well, real enough. The image above does look a bit plastic, a bit too right, but 99%+ of human beings will think that is real. If I saw that image in a profile photo, I wouldn’t give it a second look.

Part of this realism is that ChatGPT 4o can generate regular-looking people, rather than defaulting to models from a Louis Vuitton ad. AI-generated portraits have mostly been excessively beautiful. Below is a pretty typical “Midjourney lady.”

Just your average Midjourney lady kickin’ back

Professional-level type

The text rendering in 4o is a giant leap. DALL-E’s type was simply dreadful. With a single upgrade, it’s vaulted to the level of a good professional graphic designer. 4o does still make mistakes, especially if it sets a bunch of type. But for titles and brief bits of text, it’s quite reliable and gives good results.

Prompt 1: set the words Everything is a Remix in a gothic typeface, white on black

Prompt 2: in the background, place a 1950s style color image that looks like something from a criterion collection film

Sketching + prompting

For visual work, prompting is a blunt instrument. I’ve often referred to AI image prompting as “spaghetti at the wall.” No matter how detailed your prompt, you never know what you’re gonna get.

In 4o you can fluently combine sketches and prompts. This means you can sketch out your idea, then write a prompt describing how to execute the sketch. The result is a more precise way to art direct the AI.

Here’s an impressive result from X user EP.

Beyond single images

Past image generators were limited to full-frame images and couldn’t do layouts or infographics – at least, not unhorribly. The leap in quality in 4o is just as big as the one in type.

Again, this is very practical stuff. Layouts and infographics have endless real-world applications: thumbnails, directions, ads, training, social media graphics, reports, and loads more.

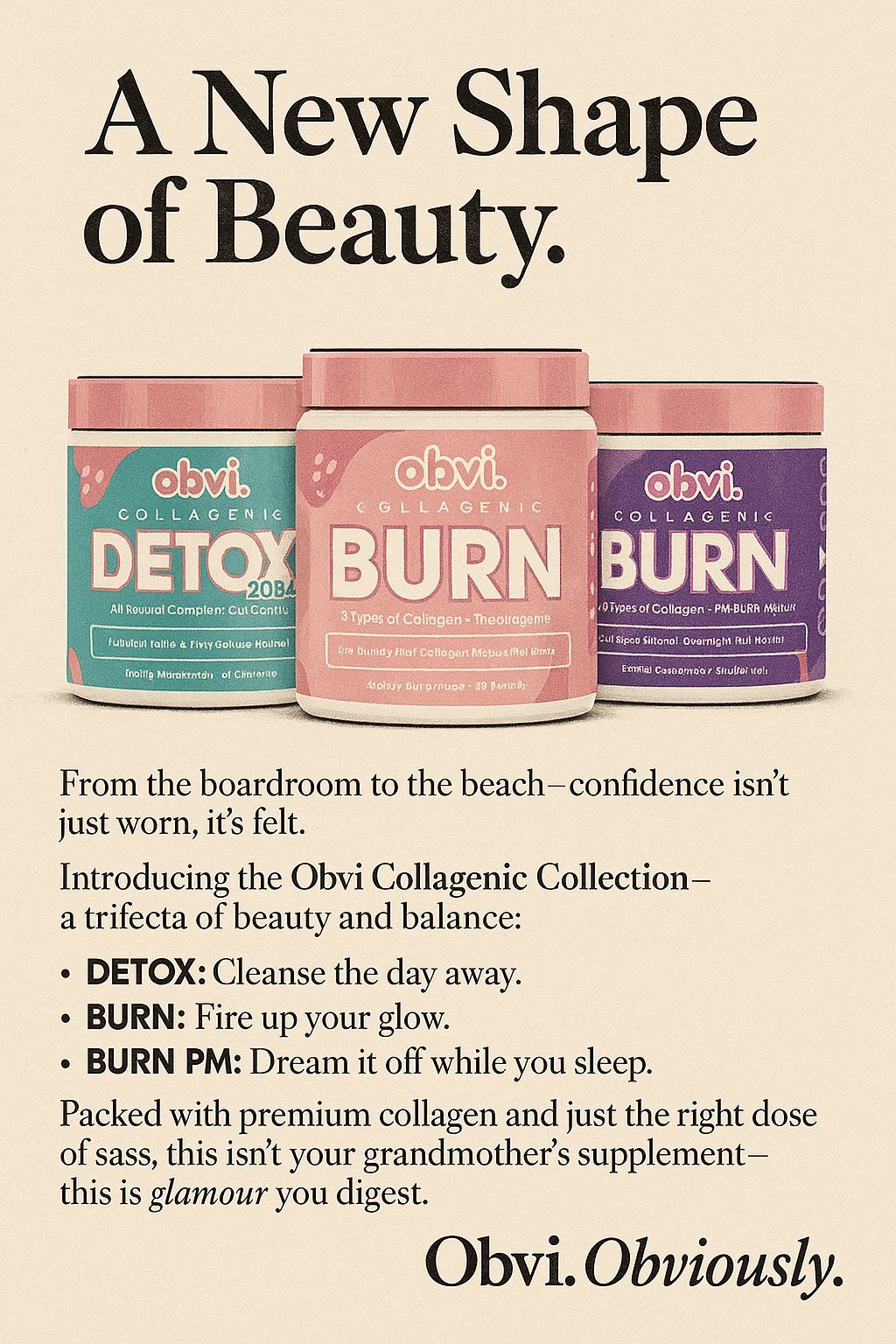

Here’s an example ad from X user Jacob Posel. ChatGPT also wrote all the copy. (Note that the product shot is garbled, but you could replace that with the original image.)

Prompt: Create a madmen-style print ad using this image (with uploaded product shot)

Extremely weird photo editing

Source: Unsplash.com

Here’s where things get weird. Let’s say you upload an image, like the one above.

Then you say “remove the black man’s hat.”

Wow, right?

But look a bit closer and compare those images. It’s not the same image. It’s now an AI image, with that same slightly off feeling.

And here’s the weirdest weird part: that black guy is a whole different dude now. He looks similar to the original person, but it’s definitely not him. This applies to everybody in the image.

And what’s this guy’s problem?

This guy is unimpressed.

When you upload an image for editing in 4o, it recreates the whole image and AI-ifies it. The effect is glaring with faces because we humans are extremely attuned to faces. With images of animals, objects, and natural scenes, the effect is less obvious. So where you can use this feature is limited, but it’s powerful nonetheless.

Mindblowing but still glitch-galore

Let me be frank: 4o image gen is plenty glitchy. Every session I spend with it is littered with hopelessly messed-up images. For example, I’ve tried to create “spaghetti being thrown at a wall” with every image generator. 4o’s result is the best I’ve gotten – and it took some back-and-forth just to get that.

Prompt 1: a 30 year old man throws a fistful of cooked pasta at a blue wall

Prompt 2: make his hand open, like he is throwing. make him less angry. he's just focused on throwing.

Prompt 3: make his arm blurry, like the shutter speed is too slow for the fast motion of his arm

If you’re accustomed to the reliability of traditional graphics apps, you’ll want to lower your expectations a bit here. (4o image generation is also way more error-prone than ChatGPT’s text generation.)

But 4o can do real work right now. If you already have visual skills, it can do important support work. And if you’re not skilled with visuals, 4o can create the practical graphics that we all need on occasion. For example, if you have to create a little promo graphic for a social media post, 4o will almost certainly do that job a lot better than you would.

I’m placing a bet on image generation

I’m so impressed with ChatGPT 4o image generation that I’m going to place a serious bet that this technology now matters. More about that shortly.

What Ghibli films and Ghibli memes share

The Wind Rises (2013)

The four seconds of film above is the result of fifteen months of painstaking animation by a single artist under Hayao Miyazaki’s watchful eye.

And this image, created in the same style, was likely made in less than a minute.

Created by Grant Slatton and GPT-4o

This image was followed by untold thousands of memes emulating the same illustrated look, and even a full trailer for the 2001 version of The Lord of the Rings.

Studio Ghibli and its resident visionary Hayao Miyazaki are the originators of this style. Their masterpieces include films like My Neighbor Totoro, Princess Mononoke, and Spirited Away.

The Ghibli memes that were suddenly everywhere were produced by OpenAI’s highly impressive GPT–4o image generation (which I will have much more to say about next week).

As social media overflowed with AI-generated images mimicking Ghibli’s distinctive aesthetic, we witnessed a fascinating and historic collision between one of animation’s most labor-intensive traditions and the instant gratification of AI art.

And yet, the difference between the Ghibli films and the Ghibli memes is not so much about methods as it is about effort and commitment.

To be clear, Hayao Miyazaki is a genius and the Ghibli meme makers are not. But they still share something deeply meaningful, not just superficially, but creatively.

Craft vs Click

When it comes to effort, there is a vast chasm separating Ghibli films from Ghibli memes.

The Ghibli memes are made quickly and easily. Even the Lord of the Rings trailer only took nine hours.

Studio Ghibli’s films are lovingly and laboriously hand-crafted over three to five years.

But as I covered in Everything is a Remix, meme-making is creative. It’s just the very first steps on a very long and demanding path.

The meme makers did something simple but powerful: they copied, transformed, and combined existing work to create something new.

Grant Slatton, who created the family portrait above and sparked this craze, just loved Ghibli films and wanted to see his family transformed into Ghibli characters.

Later meme makers were more interested in combining the Ghibli style with other works, primarily memes. What would the gentle nostalgic style of Ghibli look like applied to the devil-ish Disaster Girl meme?

The Ghibli memes came from regular people who took a moment from their day to have fun, and incidentally, they did something creative.

The meme Disaster Girl converted to Ghibli style

The Long, Long, Very Very Long Road

Hayao Miyazaki had a similar moment of incidental creativity untold decades ago. He did some rudimentary creative act while he was playing. But then he kept going. And going. And going.

Over time, these acts became bold and sophisticated. He merged the aesthetics of ukiyo-e woodblock prints into his work. The result can be seen in My Neighbor Totoro. He merged Alice in Wonderland and The Wizard of Oz with Japanese folklore. The result was Spirited Away.

What distinguishes Miyazaki is not so much how he created but how far he was willing to travel down the long, winding path of creativity until he finally started creating enduring art.

The rest of us may never get there, but we can travel at our own pace, go as far as we like, have fun along the way, and maybe even make enduring creative work for ourselves or our communities.

A woodblock print by Kawase Hasui. This style was one of the foremost influences on Hayao Miyazaki.

Poster for The Sting (1973) vs The Studio (2025)

Central image is totally different, but everything around that is taken from The Sting poster. In my opinion, it copies too much from one place.

Beyond Uncanny Valley

2D Akira vs 3D Holly

The uncanny valley is the sensation of unease we feel when a human character looks close to but not quite human.

For example, the character of Akira is not realistic at all. We easily connect with this character and relate. No uncanny valley.

The character of Holly from The Polar Express looks more like a real person, but is not relatable. She feels strange and ghost-like.

This is the uncanny valley. When human characters are kinda real but obviously not real, they have an eerie or even repulsive effect on us.

The Luke Skywalker cameo in The Mandalorian was uber-uncanny

Computer-generated imagery has been around for at least forty years, but it has never conquered the uncanny valley. There has never been a truly believable CGI human character. Anybody who saw the conclusion of season 2 of The Mandalorian got treated to a Luke Skywalker cameo that didn’t look much better than The Polar Express.

The closest there’s been to believable CGI humans are human-like characters in movies like Avatar: The Way of Water or Kingdom of the Planet of the Apes. These characters don’t feel uncanny because they’re not human.

The main reason the apes in Kingdom of the Planet of the Apes looked so good is that they’re not people.

AI is doing what CGI could not – it is crossing the uncanny valley. AI is generating human characters that are indistinguishable from real people.

There are now AI-generated still images of people that look real. Head over to ThisPersonDoesNotExist, and with every refresh of the page you’ll see a new portrait of a synthetic person. There are often small imperfections but these images are good enough to fool basically everyone.

Real? Not real? He’s not real but I can’t tell. Via ThisPersonDoesNotExist.

And we are now getting AI-generated video of realistic people. For instance, a new feature in the AI video app Captions creates realistic vloggers. If you watch these clips long enough, the illusion doesn’t hold, but in shorter durations, you can be fooled.

The vloggers generated by Captions look real, especially in short clips.

We are traveling beyond the uncanny valley. What comes next?

I can foresee two realities that will co-exist.

We will still very much have the uncanny valley. AI-generated humans mostly do not look real and creators won’t be focused on making perfectly realistic people. This is mostly what we’re going to see for a long time to come.

But sprinkled out there among the uncanny will be fake people who look real. “They” will be sharing their experiences, instructing us, persuading us, selling us, and seducing us.

What does this mean? Like everybody else, I’m still grappling with this emerging reality. I’m happy to hear your thoughts in the meantime.

But this I’m certain of: those old uncanny valley characters like Holly will soon feel nostalgic, emblems of simpler times when you could trust your eyes.